AI Inference Market Size, Share, Trends, Industry Analysis Report

By Compute, Memory (DDR and HBM), Deployment, Application, and Region (North America, Europe, Asia Pacific, Latin America, and Middle East & Africa) – Market Forecast, 2025–2034

- Published Date: Aug-2025

- Pages: 125

- Format: PDF

- Report ID: PM5561

- Base Year: 2024

- Historical Data: 2020-2023

Market Overview

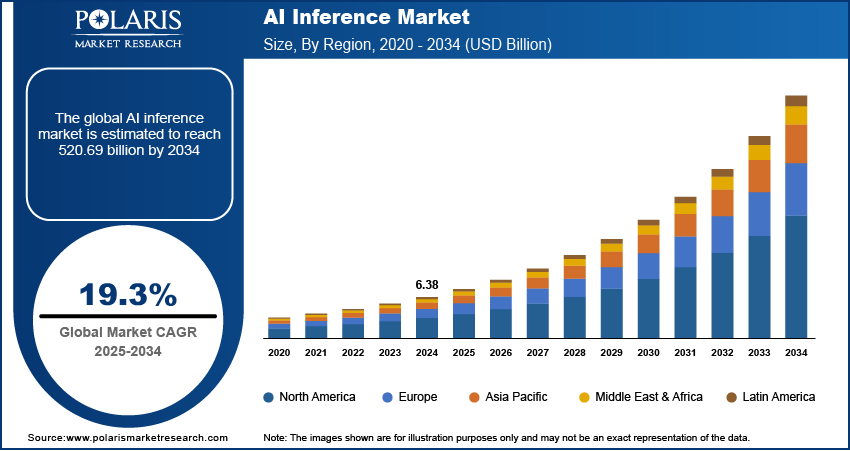

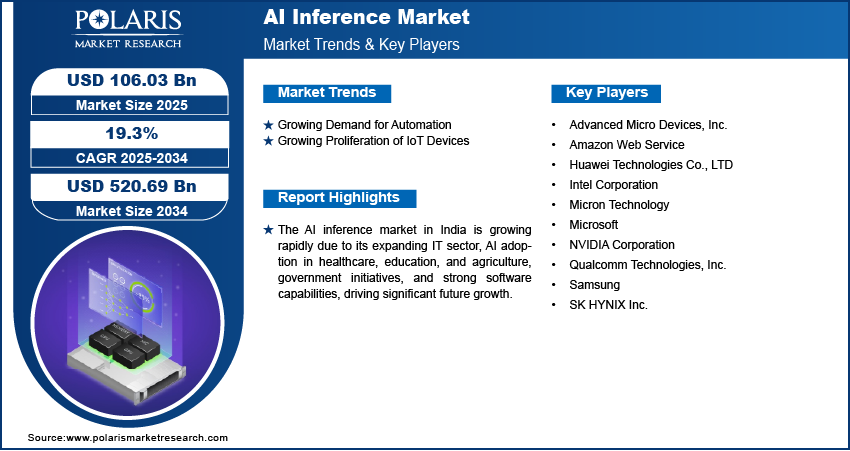

The AI inference market size was valued at USD 89.19 billion in 2024 and is projected to grow at a CAGR of 19.3% during the forecast period (2025–2034). The market is primarily driven by the expanding role of AI inference across industries and rapid advancements in edge computing.

Key Insights

- The GPU segment is projected to witness significant growth, owing to its high efficiency in AI inference tasks.

- The HBM segment led the market in 2024, as its high bandwidth enables AI models to process large volumes of data quickly and efficiently.

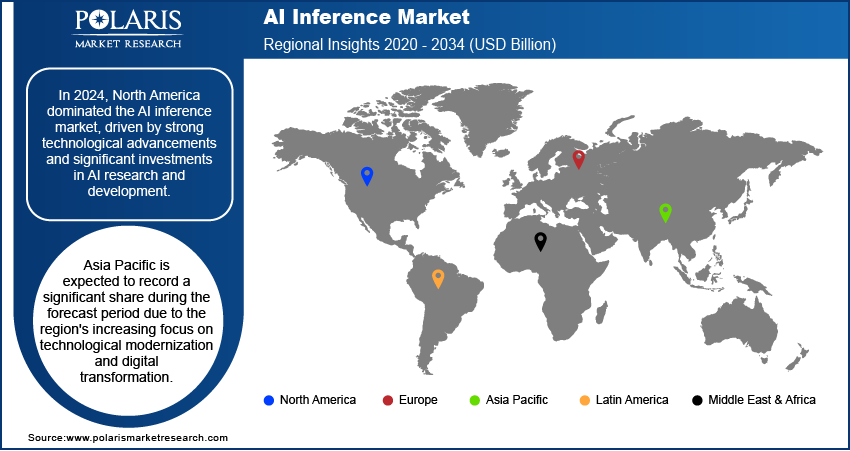

- North America accounted for the largest market share in 2024. The regional market dominance is attributed to its strong investments in AI R&D.

- Asia Pacific is projected to witness significant growth, primarily driven by the region’s increased emphasis on digital transformation and technological modernization.

Industry Dynamics

- The increasing reliance of businesses on AI inference for the automation of complex processes is driving market growth.

- The rising proliferation of IoT devices has increased demand for AI inference that analyzes data locally and enables quicker decisions.

- Growing emphasis on real-time generative AI deployment is projected to create several market opportunities.

- High costs associated with specialized AI hardware and infrastructure are presenting market challenges.

Market Statistics

2024 Market Size: USD 89.19 billion

2034 Projected Market Size: USD 520.69 billion

CAGR (2025-2034): 19.3%

North America: Largest Market in 2024
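The headline figures can be cross-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The sketch below is only a consistency check on the numbers stated in this report, not the report's own forecasting model; the function names are illustrative.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value reached by compounding start_value at `rate` for `years` years."""
    return start_value * (1 + rate) ** years


# Market sizes stated in this report, in USD billion.
SIZE_2024 = 89.19
SIZE_2025 = 106.03
SIZE_2034 = 520.69

print(f"Implied CAGR 2024-2034: {cagr(SIZE_2024, SIZE_2034, 10):.1%}")
print(f"Implied CAGR 2025-2034: {cagr(SIZE_2025, SIZE_2034, 9):.1%}")
print(f"2034 size compounding 2024 at 19.3%: USD {project(SIZE_2024, 0.193, 10):.1f} billion")
```

Both implied rates round to 19.3%, so the 2024, 2025, and 2034 values are consistent with the stated CAGR whether growth is measured over ten years from the base year or over the nine compounding years from 2025.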

AI Impact on AI Inference Market

- Advancements in AI architectures accelerate inference, lowering latency for applications such as autonomous driving, edge computing, and real-time analytics.

- AI-guided hardware optimization enables efficient chip designs that balance performance with reduced power consumption.

- Automated model compression and pruning techniques powered by AI improve inference efficiency on resource-constrained devices.

- Continuous AI innovation in workload orchestration supports scalable deployment of inference across cloud, edge, and on-device environments.
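To illustrate what "pruning" and "compression" mean at the level of model weights, the sketch below applies unstructured magnitude pruning (zeroing the smallest-magnitude weights) followed by symmetric per-tensor int8 quantization to a toy weight vector. These are common generic techniques chosen here for illustration; the function names and weight values are hypothetical, and the sketch shows only the transformations themselves, not the AI-driven search that automated tools use to decide how much to prune or quantize each layer.

```python
def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the smallest-magnitude fraction `sparsity` of the weights."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    n_prune = int(len(weights) * sparsity)
    # Indices sorted by absolute weight value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric int8 quantization: each weight w is stored as q with w ≈ q * scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from int8 values."""
    return [v * scale for v in q]


# Hypothetical weights for a single tiny layer.
weights = [0.8, -0.05, 0.3, -0.6, 0.01, 0.02, -0.9, 0.4]
pruned = magnitude_prune(weights, sparsity=0.5)
q, scale = quantize_int8(pruned)
print("pruned:", pruned)
print("int8:", q, "scale:", scale)
print("restored:", [round(w, 3) for w in dequantize(q, scale)])
```

Half of the weights become zero (which sparse kernels can skip), and the rest are stored in one byte each instead of four, at the cost of a rounding error of at most half the scale per weight.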


AI inference refers to the process of using a trained AI model to make predictions or decisions based on new input data. It typically involves running the model on specific hardware or software to generate results quickly and efficiently.
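The split between training and inference can be sketched with a tiny logistic-regression model whose parameters are assumed to have been learned earlier; the weight values, class name, and two-feature input are hypothetical. Inference is only a forward pass over new data: the parameters are read, never updated.

```python
import math
from dataclasses import dataclass


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


@dataclass(frozen=True)
class TrainedLogisticModel:
    """Parameters are fixed before inference; nothing here updates them."""
    weights: tuple[float, ...]
    bias: float

    def predict_proba(self, features: list[float]) -> float:
        # Forward pass: weighted sum of the new inputs plus bias, then sigmoid.
        if len(features) != len(self.weights):
            raise ValueError("feature count does not match the model")
        z = sum(w * x for w, x in zip(self.weights, features)) + self.bias
        return sigmoid(z)

    def predict(self, features: list[float], threshold: float = 0.5) -> bool:
        return self.predict_proba(features) >= threshold


# Hypothetical parameters standing in for the output of an earlier training run.
model = TrainedLogisticModel(weights=(1.5, -2.0), bias=0.25)

# Inference: apply the fixed model to new input data it has not seen before.
for sample in ([1.0, 0.2], [0.1, 1.0]):
    print(sample, round(model.predict_proba(sample), 3), model.predict(sample))
```

Real inference workloads run far larger models on GPUs, NPUs, or other accelerators, but the pattern is the same: load fixed parameters once, then evaluate them on each new input.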

AI inference is becoming essential in industries that require quick decision-making, such as healthcare, automotive, and finance. These industries use AI models to process real-time data and make immediate predictions or take immediate actions. In autonomous vehicles, AI inference supports split-second navigation and safety decisions. Similarly, in healthcare, AI models analyze medical images and support diagnostics in near real time, which can improve patient outcomes. This need for real-time decision-making increases demand for faster, more efficient inference solutions, which in turn drives the AI inference market.

Advancements in edge computing are also driving the growth of the AI inference market. Edge computing refers to processing data close to where it is generated, for example on smartphones, cameras, or IoT sensors. This reduces the need to send data back and forth to centralized cloud servers, which decreases latency and improves speed. In AI inference, this means models can make predictions directly on these devices, enabling faster responses and more efficient use of resources. Because edge computing gives businesses and consumers lower costs and faster processing, it is accelerating the adoption of AI inference technologies in applications ranging from smart homes to industrial automation.
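The latency argument can be made concrete with a simple model: end-to-end response time equals network round trip plus upload time plus model compute. All numbers below are hypothetical placeholders rather than measurements, and the function name is illustrative; the example deliberately assumes the edge chip is slower at compute than a cloud accelerator, so the comparison is not stacked in favor of the edge.

```python
def latency_ms(compute_ms: float, rtt_ms: float = 0.0,
               payload_mbit: float = 0.0, uplink_mbps: float = float("inf")) -> float:
    """End-to-end latency = network round trip + upload time + model compute.

    On-device (edge) inference is modeled by leaving the network terms at 0.
    """
    upload_ms = payload_mbit * 1000.0 / uplink_mbps
    return rtt_ms + upload_ms + compute_ms


# Hypothetical inputs for illustration only (not measurements):
# the edge chip is assumed slower at compute, the cloud GPU faster,
# but the cloud path pays for a 40 ms round trip and a 4 Mbit upload at 20 Mbit/s.
edge = latency_ms(compute_ms=30.0)
cloud = latency_ms(compute_ms=5.0, rtt_ms=40.0, payload_mbit=4.0, uplink_mbps=20.0)
print(f"edge: {edge:.0f} ms, cloud: {cloud:.0f} ms")
```

Under these assumptions the network terms dominate, so on-device inference responds faster despite slower compute. With a smaller payload or a faster, closer link the cloud path can come out ahead, so the benefit depends on the workload.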

Market Dynamics

Growing Demand for Automation

AI-powered automation is transforming industries by reducing the need for human intervention in repetitive tasks. From automated manufacturing lines to AI-driven customer service chatbots, businesses are increasingly relying on AI inference to automate complex processes. According to the International Federation of Robotics, 4,281,585 industrial robots were operating in factories worldwide in 2023, a 10% increase over 2022. This technology allows machines to perform tasks more quickly, accurately, and consistently than humans, increasing productivity and reducing operational costs. As more businesses embrace automation, demand for fast and efficient AI inference systems is growing, thereby driving the growth of the AI inference market.

Growing Proliferation of IoT Devices

The growth of the Internet of Things (IoT) has led to an explosion of devices capable of generating vast amounts of data. These devices, ranging from smart home gadgets to industrial sensors, require AI inference to analyze the data locally and make quick decisions. For example, a smart thermostat uses AI inference to adjust temperatures based on environmental conditions. As the number of IoT devices increases, the demand for AI inference technologies grows, enabling faster, on-device decision-making, thereby driving the AI inference market expansion.

Segment Analysis

Market Assessment by Compute

The AI inference market segmentation, based on compute, includes GPU, CPU, FPGA, NPU, and others. The GPU segment is expected to witness significant growth during the forecast period. GPUs excel at parallel processing, which makes them highly efficient for AI inference tasks that require fast computation, such as image recognition, language processing, and data analysis. Their ability to execute many operations simultaneously delivers quicker results, especially for complex AI models. The increasing adoption of AI for real-time decision-making and automation is raising demand for GPUs in AI inference applications, thereby driving the segment's growth.
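Parallel hardware helps because inference on a batch of inputs reduces largely to matrix multiplication, and each input's output row can be computed independently of the others. The sketch below only illustrates that independence: it dispatches each row as a separate task and checks the result against a sequential loop. Python's thread pool is used purely to show the decomposition; it does not model GPU performance (CPython threads will not speed up this arithmetic), and the matrices are arbitrary toy values.

```python
from concurrent.futures import ThreadPoolExecutor

Matrix = list[list[float]]


def row_times_matrix(row: list[float], weights: Matrix) -> list[float]:
    """One input vector times the weight matrix: a self-contained unit of work."""
    n_out = len(weights[0])
    return [sum(row[k] * weights[k][j] for k in range(len(row))) for j in range(n_out)]


def batched_forward_sequential(batch: Matrix, weights: Matrix) -> Matrix:
    return [row_times_matrix(row, weights) for row in batch]


def batched_forward_parallel(batch: Matrix, weights: Matrix, workers: int = 4) -> Matrix:
    # Rows do not depend on one another, so they can run concurrently;
    # GPU kernels exploit this kind of independence, down to individual
    # output elements, across many cores at once.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda r: row_times_matrix(r, weights), batch))


batch = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]   # 3 inputs, 2 features each
weights = [[0.5, -1.0, 2.0], [1.5, 0.0, -0.5]]  # maps 2 features -> 3 outputs

assert batched_forward_parallel(batch, weights) == batched_forward_sequential(batch, weights)
print(batched_forward_parallel(batch, weights))
```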

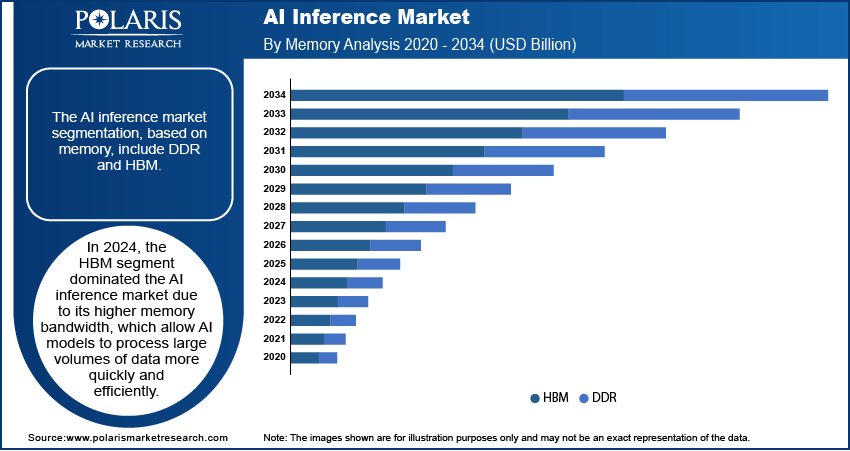

Market Evaluation by Memory

The AI inference market segmentation, based on memory, includes DDR and HBM. The HBM segment dominated the market in 2024. HBM (high-bandwidth memory) is designed to provide much higher memory bandwidth than conventional DDR memory, allowing AI models to process large volumes of data more quickly and efficiently. This high-performance memory is essential for tasks that require rapid data access, such as real-time AI inference. By reducing data bottlenecks, HBM enables faster computation, making it well suited to AI models that involve complex algorithms or large datasets. These advantages underpin the segment's leading position in the market.
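A back-of-the-envelope calculation shows why bandwidth matters. When a large language model generates text one token at a time at batch size 1, roughly every weight is read from memory once per token, so peak memory bandwidth caps tokens per second. The sketch below assumes a hypothetical 7-billion-parameter model stored at 2 bytes per parameter and compares one DDR5-4800 channel (64-bit bus) with one HBM3 stack (1024-bit interface at 6.4 Gb/s per pin). These are peak theoretical figures per interface; real systems use several channels or stacks, batching, and on-chip caches, so treat the result as an upper-bound comparison, not a benchmark.

```python
def peak_bandwidth_gbps(transfer_rate_mtps: float, bus_width_bits: int) -> float:
    """Peak bandwidth in GB/s = transfers per second x bytes per transfer."""
    return transfer_rate_mtps * 1e6 * (bus_width_bits / 8) / 1e9


def max_tokens_per_second(bandwidth_gbps: float, n_params: float, bytes_per_param: float) -> float:
    """Bandwidth-bound ceiling if all weights are streamed once per generated token."""
    bytes_per_token = n_params * bytes_per_param
    return bandwidth_gbps * 1e9 / bytes_per_token


# Assumed configurations (peak, per interface).
ddr5_channel = peak_bandwidth_gbps(transfer_rate_mtps=4800, bus_width_bits=64)   # 38.4 GB/s
hbm3_stack = peak_bandwidth_gbps(transfer_rate_mtps=6400, bus_width_bits=1024)   # 819.2 GB/s

# Hypothetical model: 7e9 parameters at 2 bytes each (FP16/BF16), about 14 GB of weights.
for name, bw in (("DDR5-4800 channel", ddr5_channel), ("HBM3 stack", hbm3_stack)):
    print(f"{name}: {bw:.1f} GB/s -> at most {max_tokens_per_second(bw, 7e9, 2):.1f} tokens/s")
```

Because the ceiling scales linearly with bandwidth, the single HBM3 stack's limit is about 21 times that of the single DDR5 channel under these assumptions, which is the data-bottleneck effect described above.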

Regional Insights

By region, the study provides insights into the AI inference market in North America, Europe, Asia Pacific, Latin America, and the Middle East & Africa. In 2024, North America dominated the market, driven by strong technological advancements and significant investments in AI research and development. The presence of major technology companies such as Google, Microsoft, and NVIDIA accelerates AI innovation and fosters widespread adoption across industries. The region also has well-established infrastructure, enabling faster deployment of AI solutions in sectors such as healthcare, finance, and automotive. Additionally, governments' growing interest in AI applications for national security, healthcare, and public services is further boosting demand for AI inference technologies in the region.

Asia Pacific is expected to capture a significant share of the AI inference industry during the forecast period, owing to the region's increasing focus on technological modernization and digital transformation. Countries such as China, Japan, and South Korea are making significant strides in AI development, supported by large-scale government initiatives, technology investments, and a booming startup ecosystem. Rising AI adoption in applications such as e-commerce, smart cities, and industrial automation is increasing demand for AI inference chips. Additionally, the region's large population generates vast amounts of data, which requires efficient AI inference solutions to manage and process it effectively.

The AI inference market in India is experiencing substantial growth due to its growing IT sector and increased adoption of AI in various industries, including healthcare, education, and agriculture. The country is focusing on AI to address challenges such as improving healthcare accessibility, boosting agricultural productivity, and improving digital education. India's large, tech-savvy workforce and strong software development capabilities make it an ideal hub for AI innovation. The government’s initiatives to promote AI research, along with increasing investments in AI infrastructure, are accelerating the demand for AI inference solutions in the country.

Key Players & Competitive Analysis Report

The AI inference market is constantly evolving, with numerous companies striving to innovate and distinguish themselves. Leading global corporations dominate the market by leveraging extensive research and development and advanced technologies. These companies pursue strategic initiatives such as mergers and acquisitions, partnerships, and collaborations to enhance their product offerings and expand into new markets.

New companies are making an impact on the industry by introducing innovative products that meet the demands of specific market sectors. According to the AI inference industry analysis, this competitive trend is amplified by continuous progress in product offerings. Major players in the market include NVIDIA Corporation; Advanced Micro Devices, Inc.; Intel Corporation; SK HYNIX Inc.; Samsung; Micron Technology; Qualcomm Technologies, Inc.; Huawei Technologies Co., LTD; Microsoft; and Amazon Web Services.

Microsoft is a multinational technology company headquartered in Redmond, Washington. It offers a wide range of products and services, including operating systems, productivity software, gaming consoles, and cloud-based solutions. Its flagship product, Microsoft Windows, is the world's most widely used desktop operating system. Other popular products include Microsoft Office, Skype, and the Xbox gaming console. In recent years, Microsoft has invested heavily in artificial intelligence (AI) and machine learning, using AI to improve its existing products and services and to develop new AI-based applications. For instance, Microsoft's Cortana virtual assistant uses machine learning to provide personalized recommendations and insights to users. The company has also developed AI-based products and services such as the Azure Machine Learning platform, which allows developers to build, deploy, and manage machine learning models at scale, and AI tools for healthcare such as Microsoft Healthcare Bot, which helps patients get answers to health-related questions. Furthermore, Microsoft is actively involved in AI research and development and has established partnerships with leading universities and research institutions worldwide. Microsoft's AI inference solutions provide high-performance, scalable cloud-based tools for real-time decision-making, leveraging Azure AI, ONNX, and optimized hardware to accelerate model deployment across industries.

Qualcomm Technologies, headquartered in San Diego, California, has focused on wireless technology since its founding in 1985. Initially known for CDMA technology, it now offers solutions in 5G, IoT, and other advanced communication technologies. Qualcomm operates a fabless model, concentrating on research and development while outsourcing chip production. Its innovations, including Snapdragon systems-on-chip and Gobi modems, have shaped mobile communications and supported advancements in AI, machine learning, and edge computing. Qualcomm remains at the forefront of wireless technology, with over USD 16 billion invested in R&D. The advent of 5G has amplified Qualcomm's role in improving data speeds, reducing latency, and expanding IoT applications across industries such as automotive, healthcare, and smart cities. The company's technologies enable seamless device connectivity and support its vision of an interconnected "Internet of Everything." Strategic partnerships and a licensing program with over 190 companies worldwide further extend Qualcomm's influence and foster innovation within the wireless ecosystem. For AI inference, Qualcomm offers the Cloud AI 100, a high-performance inference accelerator designed for cloud workloads that combines strong performance with high power efficiency.

Key Companies

- Advanced Micro Devices, Inc.

- Amazon Web Services

- Huawei Technologies Co., LTD

- Intel Corporation

- Micron Technology

- Microsoft

- NVIDIA Corporation

- Qualcomm Technologies, Inc.

- Samsung

- SK HYNIX Inc.

AI Inference Industry Developments

January 2025: At CES 2025, Qualcomm launched its AI On-Prem Appliance Solution and AI Inference Suite, enabling businesses to run generative AI workloads on-premises to reduce operational costs and enhance data privacy.

August 2024: Cerebras launched Cerebras Inference, which the company described as the fastest AI inference solution, claiming speeds 20 times faster than GPU-based systems at significantly lower prices than alternatives.

AI Inference Market Segmentation

By Compute Outlook (Revenue USD Billion, 2020–2034)

- GPU

- CPU

- FPGA

- NPU

- Others

By Memory Outlook (Revenue USD Billion, 2020–2034)

- DDR

- HBM

By Deployment Outlook (Revenue USD Billion, 2020–2034)

- Cloud

- On-Premise

- Edge

By Application Outlook (Revenue USD Billion, 2020–2034)

- Generative AI

- Machine Learning

- Natural Language Processing

- Computer Vision

By Regional Outlook (Revenue USD Billion, 2020–2034)

- North America
  - US
  - Canada

- Europe
  - Germany
  - France
  - UK
  - Italy
  - Spain
  - Netherlands
  - Russia
  - Rest of Europe

- Asia Pacific
  - China
  - Japan
  - India
  - Malaysia
  - South Korea
  - Indonesia
  - Australia
  - Rest of Asia Pacific

- Middle East & Africa
  - Saudi Arabia
  - UAE
  - Israel
  - South Africa
  - Rest of Middle East & Africa

- Latin America
  - Mexico
  - Brazil
  - Argentina
  - Rest of Latin America

Report Scope

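| Report Attributes | Details |
|---|---|
| Market Size Value in 2024 | USD 89.19 billion |
| Market Size Value in 2025 | USD 106.03 billion |
| Revenue Forecast in 2034 | USD 520.69 billion |
| CAGR | 19.3% from 2025–2034 |
| Base Year | 2024 |
| Historical Data | 2020–2023 |
| Forecast Period | 2025–2034 |
| Quantitative Units | Revenue in USD billion and CAGR from 2025 to 2034 |
| Report Coverage | Revenue Forecast, Market Competitive Landscape, Growth Factors, and Industry Trends |
| Segments Covered | By Compute, By Memory, By Deployment, By Application, and By Region |
| Regional Scope | North America, Europe, Asia Pacific, Latin America, and Middle East & Africa |
| Competitive Landscape | NVIDIA Corporation; Advanced Micro Devices, Inc.; Intel Corporation; SK HYNIX Inc.; Samsung; Micron Technology; Qualcomm Technologies, Inc.; Huawei Technologies Co., LTD; Microsoft; Amazon Web Services |
| Report Format | PDF |
| Customization | Report customization as per your requirements with respect to countries, regions, and segmentation. |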

FAQs

The AI inference market size was valued at USD 89.19 billion in 2024 and is projected to grow to USD 520.69 billion by 2034.

The global market is projected to register a CAGR of 19.3% during the forecast period, 2025-2034.

North America had the largest share of the global market in 2024.

The key players in the market are NVIDIA Corporation; Advanced Micro Devices, Inc.; Intel Corporation; SK HYNIX Inc.; Samsung; Micron Technology; Qualcomm Technologies, Inc.; Huawei Technologies Co., LTD; Microsoft; and Amazon Web Services.

The HBM segment dominated the AI inference market in 2024 due to its higher memory bandwidth, which allows AI models to process large volumes of data more quickly and efficiently.

The GPU segment is expected to witness significant growth in the forecast period due to the parallel processing capability of GPUs, which makes them highly efficient for AI inference tasks that require fast computation, such as image recognition, language processing, and data analysis.